Leverpoint Labs

Mission: Better tools for important decisions

Policy and government decision-making can benefit from all the analytical support it can get. Even the best-resourced institutions are challenged by the task of navigating volatile geopolitical environments, complex social issues, fast-moving crises, or the need to make infrastructure decisions with multi-decadal timelines in the face of deep uncertainty about the future.

Advances in AI promise to dramatically expand the amount of cognition institutions have available to help navigate hard problems. But implementation and integration lag behind the frontier of possibility.

Our goal is to bring the power of advanced AI capabilities to bear on these challenges. We build software to help institutions make well-calibrated decisions in the face of uncertainty, complexity, and rapid change. We aim to use AI to augment human sensemaking, agency, and judgement: offering additional analytical capacity rather than automated decision-making, and clarity around tradeoffs rather than value judgements.

Realizing the potential of AI for policy and government

The benefits could be transformational:

Abundant cognition applied to every issue and best-in-class decision support at the click of a button

Adaptive and responsive planning, policymaking, and regulation

Decision-making based on comprehensive evidence and informed by high-bandwidth data pipelines and broad democratic input

Greater strategic coherence and transparency across institutional decision-making

Etc.

Meanwhile, the risks are sobering:

Analytical process risks such as specious coherence, compounding errors, and opaque reasoning

Human-computer interaction risks around automation bias (inappropriate deference to machine output), human deskilling, and over-reliance on AI outputs

Sociotechnical risks around democratic illegitimacy, value lock-in and model monoculture, and sidelining of tacit knowledge or stakeholder input

Data and cybersecurity risks

Etc.

What would it actually look like to maximize the benefits and minimize the risks of AI in policy contexts?

It’s not yet clear — more work is needed to facilitate near-term adoption of the frontier of beneficial capabilities, while monitoring risks, developing greater understanding of them, and testing practical mitigation strategies. To avoid a late, rushed, and uninformed adoption of increasingly powerful AI systems, developers, researchers, and institutions should be experimenting and learning now.

These questions cannot be put off: LLMs are already being used for analysis and decision support in government (both over and under the table).

This often happens via chatbots without specialised scaffolding, orchestration, UX, benchmarking, or calibration. This status quo both creates unnecessary risk and leaves potential utility on the table.

The future of AI in policy will not be off-the-shelf chatbots alone, but specialist software that enables both increasingly useful tools and their responsible adoption. Examples include:

Digital twins and policy simulation engines

Evidence-synthesis and literature-review tools

AI-driven forecasting and early-warning systems

Legal and regulatory copilots, and law-as-code systems

And much more.

Leverpoint Labs: AI tools for strategic foresight

We’re building tools to support foresight-informed policy and decision-making under uncertainty.

These tools help users make sense of complex issues and how they may play out in possible futures, project the possible impacts of policy interventions (with accurately calibrated levels of uncertainty), develop strategies robust to uncertainty and change, and identify indicators to monitor so that strategies can be adjusted as time passes.

Within this broader space, specific capabilities include forecasting, exploratory and multi-domain modeling, strategic foresight and scenario planning, and stress-testing/red-teaming.

We see clear potential for AI to significantly uplift all of these capabilities — in speed, scale, comprehensiveness, analytical depth, decision-relevance, and ease of application.

We also see AI as offering the chance to integrate these techniques into flexible systems that provide appropriate analytical support across differing degrees of uncertainty, time horizons, data availability, and user needs.

Capabilities and products:

Probabilistic scenario planning software

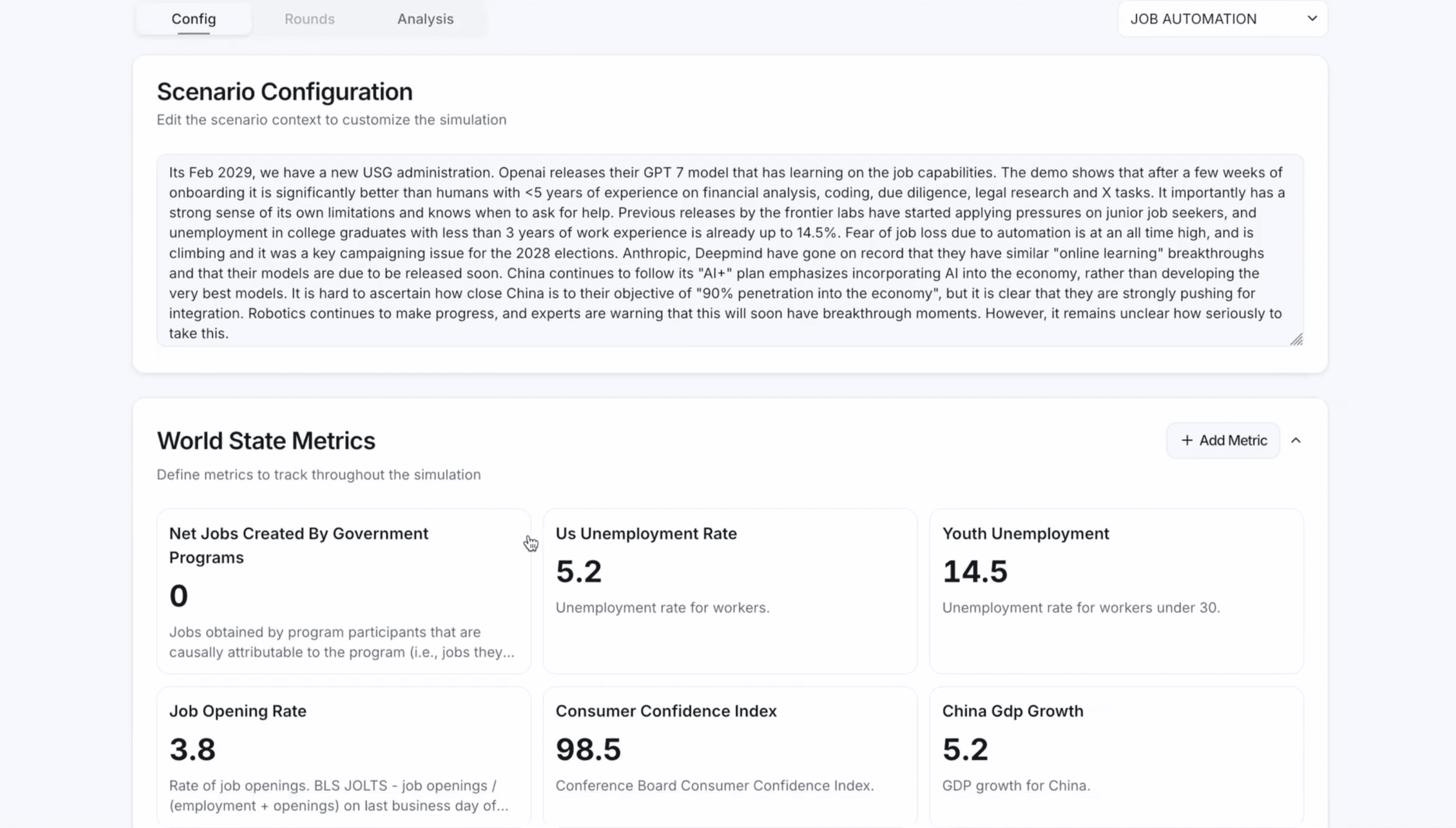

We are developing an AI-powered strategic foresight system integrating scenario planning and forecasting.

Similar to traditional scenario planning, this system can be used to make sense of an issue, identify key uncertainties, map possible trajectories, and explore potential futures.

Rapidly improving LLM forecasting performance offers the prospect of an abundance of well-calibrated forecasting capacity — allowing us to ground scenarios in probabilities, where applicable.

Scenario analysis deep-dive tool

Our software enables fast, in-depth, rigorous exploration of potential scenarios.

Taking a given scenario, outcome, or dynamic as a starting point, the system stress-tests its assumptions, evaluates its coherence, details possible pathways leading to it, and runs forecasts on key drivers.

This gives users a better-grounded and more detailed analysis of that future possibility, an understanding of its major drivers, a probabilistic sense of the likelihood of its key features, and more clarity on preparatory strategies, levers, and intervention points.

“Live Scenarios”

Of interest to: Research organizations publishing or communicating scenario research

We are working with research organizations on “Live Scenarios,” leveraging AI to make scenario research more interactive and applicable to the consumers of that research.

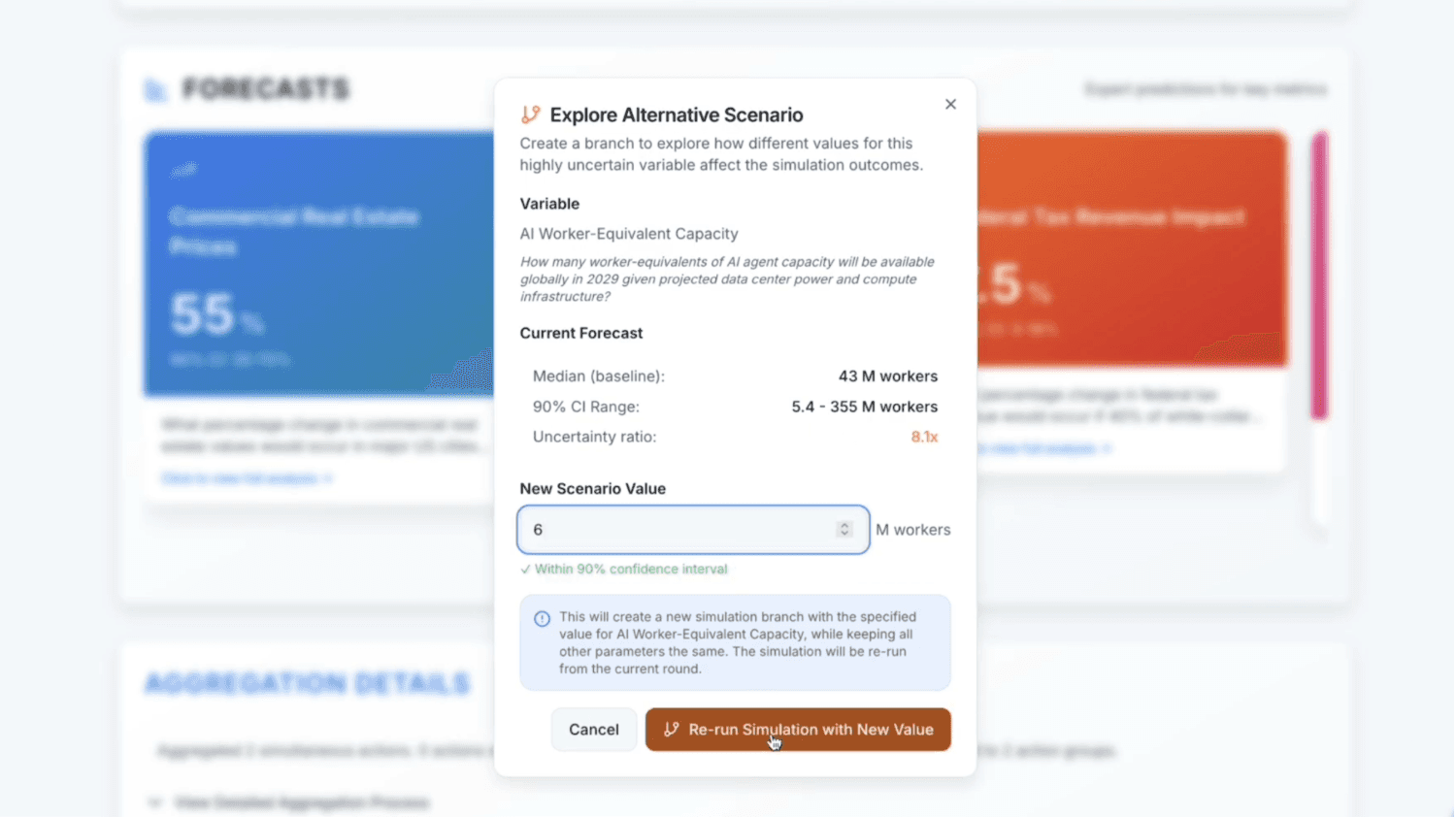

Rather than reading static reports, users can probe the reasoning behind any assumption and branch into sub-scenarios by asking "what if", testing how outcomes change if an assumption shifts or a policy intervention is introduced. The system plays out these branches using the forecasting and simulation capabilities described above, grounding exploration in calibrated probabilities rather than narrative plausibility alone.

While static scenarios are consumed passively, live scenarios invite interaction, building intuition for the underlying dynamics. Users can interrogate why a scenario unfolds as it does, trace causal chains, and challenge assumptions they find doubtful. This builds earned trust in the analysis through active scrutiny rather than deference.

The goal of foresight work is ultimately to shift how decision-makers think; that shift happens more reliably when they have stress-tested the logic themselves than when they've read a PDF.

Tabletop Exercise Engine

Of interest to: Developers and facilitators of wargames and scenario exercises; strategists and analysts interested in on-demand exercises.

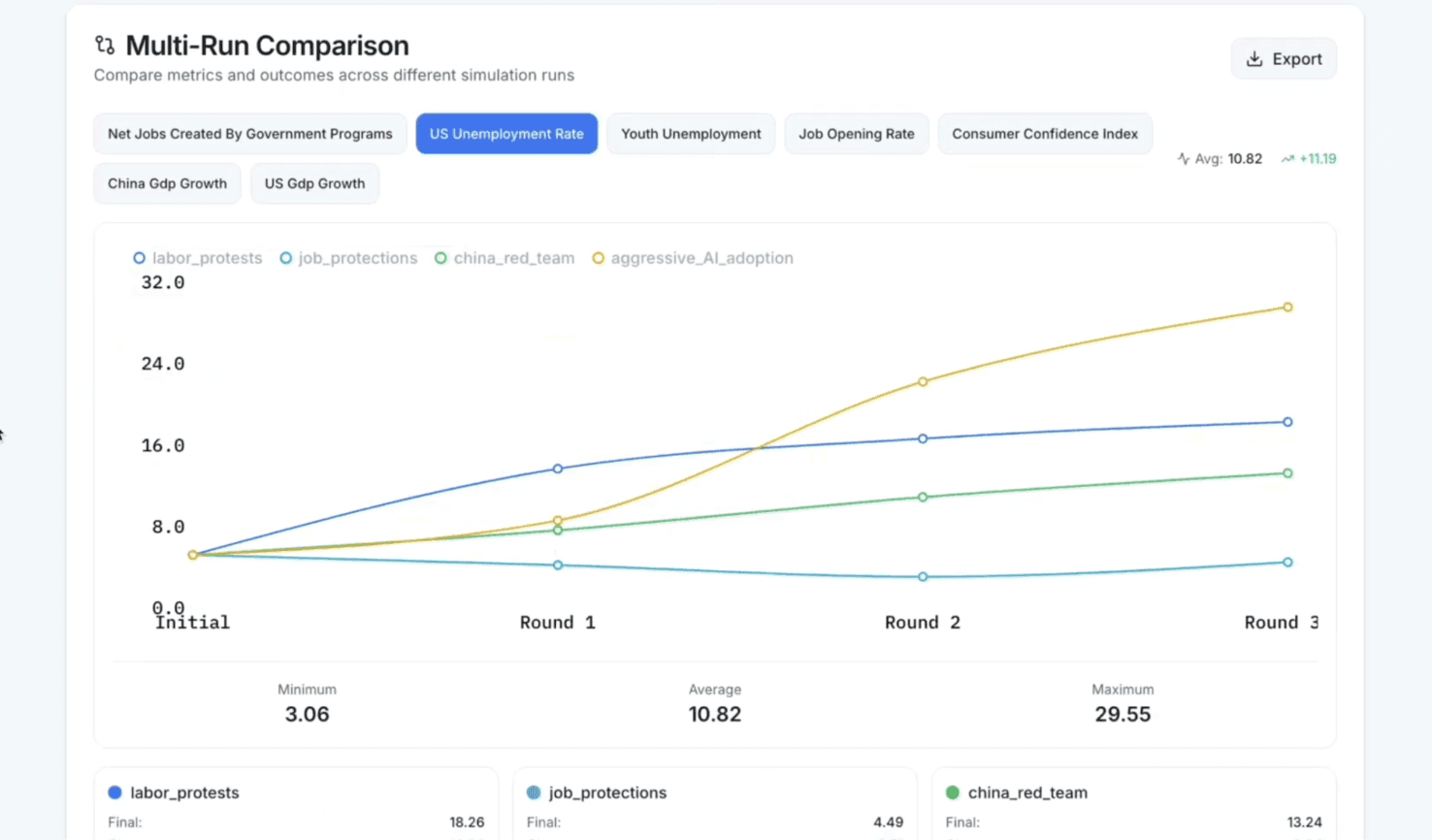

Our system allows any scenario to serve as the starting point for a dynamic tabletop exercise, played against other people or against AIs.

Starting assumptions can be adjusted to play out different scenarios, and different stakeholders can be added. The world state updates between rounds, and different strategies can be compared against one another.

The ability to play out a scenario in a coherent, analytically sound way is key both to our general vision and to the particular use case of wargame adjudication. We developed an adjudication system as the basis for a collaboration with one of our design partners.

Previously, such an exercise would have required teams of experts in the room, months of preparation, and hundreds or thousands of total hours of participant time to play out.

Of course, limitations of current AI models mean the system’s performance may not consistently meet expert standards, and we’re continuing to iterate on this. The immediate application we see is hybrid human-AI adjudication, making it radically easier for experts to adjudicate these exercises while maintaining human oversight.

Custom projects

We’re open to adapting these systems to the particular needs of other users working in foresight, policy, strategy, wargaming, or related fields.

Please contact info@leverpoint.ai to discuss collaborations or for a demo of our software.
